Most segmentation-from-motion algorithms rely on a single technique to segment the image. These techniques suffer from either the correspondence problem or the aperture problem, depending on whether they are based on feature matching or on spatio-temporal gradients of the grey value, respectively. While the aperture problem is inherent to gradient methods, the correspondence problem can be reduced by using more complex, i.e. larger, features. This, however, lowers the spatial resolution.
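To make the aperture problem concrete, here is the standard brightness-constancy constraint on which gradient methods are commonly built. It is textbook background rather than something taken from this project, and the symbols are notation assumed here: $I$ is the grey value, $(u, v)$ the image flow, and subscripts denote partial derivatives.

$$
I_x u + I_y v + I_t = 0
$$

This is one equation in two unknowns, so at a single location only the flow component along the grey-value gradient, $-I_t / \sqrt{I_x^2 + I_y^2}$, is determined. The component along the edge remains unknown.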

The key idea of this project is to integrate information from the Gabor- and Mallat-wavelet transforms to overcome the aperture and the correspondence problems. The Gabor-wavelet transform can be used to compute image flow with high precision and reliability but poor spatial resolution. A histogram over the image flow field is then used to infer motion hypotheses, that is, hypotheses about how many objects are in the scene and in which directions they are moving. The basic assumption is that objects move only translationally, though the system degrades gracefully as this assumption is violated. The motion hypotheses are then used to reduce the correspondence problem on the high-resolution representation of the Mallat-wavelet transform. Integration over time further improves reliability. No object models are used, so the algorithm is able to segment several objects of arbitrary shape; see Figures 1 and 2. No assumptions about motion continuity are made, so the algorithm is able to track objects that jump back and forth arbitrarily; see Figure 2. Segmentation is performed only on edges, because only edges provide enough evidence for reliable motion estimation and edge information suffices to reconstruct images.
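The two central steps, inferring motion hypotheses from a flow histogram and then testing only those hypotheses at high resolution, can be illustrated with a small sketch. This is not the program used for the publications: the function names, the `min_fraction` threshold, the integer-pixel binning, and the use of plain edge strengths instead of Gabor- and Mallat-wavelet responses are all assumptions made for illustration.

```python
from collections import Counter


def motion_hypotheses(flow, min_fraction=0.1):
    """Return dominant integer displacements found in a list of (dx, dy) flow vectors.

    Hypothetical helper: bins are one pixel wide and `min_fraction` is an
    assumed threshold, not a value from the original work.
    """
    # Histogram over the flow field: quantize each vector to an integer bin.
    counts = Counter((round(dx), round(dy)) for dx, dy in flow)
    total = sum(counts.values())
    peaks = []
    for (bx, by), n in counts.items():
        if n < min_fraction * total:
            continue  # too few votes to count as a moving object
        # Keep the bin only if none of its 8 neighbours has more votes
        # (equal neighbouring bins are both kept).
        neighbours = [
            counts.get((bx + i, by + j), 0)
            for i in (-1, 0, 1)
            for j in (-1, 0, 1)
            if (i, j) != (0, 0)
        ]
        if all(n >= m for m in neighbours):
            peaks.append(((bx, by), n))
    peaks.sort(key=lambda p: -p[1])  # strongest hypothesis first
    return [displacement for displacement, _ in peaks]


def label_edge_pixels(edges_a, edges_b, hypotheses):
    """Assign each edge pixel of frame A the index of its best-matching hypothesis.

    `edges_a` and `edges_b` map (x, y) to an edge strength for edge pixels only.
    Only the hypothesized displacements are tested, which is what keeps the
    correspondence problem small. Pixels with no match under any hypothesis
    are left unlabeled.
    """
    labels = {}
    for (x, y), value in edges_a.items():
        best = None
        for k, (dx, dy) in enumerate(hypotheses):
            target = edges_b.get((x + dx, y + dy))
            if target is None:
                continue  # no edge at the displaced position
            error = abs(value - target)
            if best is None or error < best[0]:
                best = (error, k)
        if best is not None:
            labels[(x, y)] = best[1]
    return labels


# Synthetic example: a resting background and two translating objects.
flow = [(0.0, 0.0)] * 50 + [(2.1, 0.0)] * 40 + [(-3.0, 0.2)] * 30 + [(5.0, 5.0)] * 2
hypotheses = motion_hypotheses(flow)
print(hypotheses)  # [(0, 0), (2, 0), (-3, 0)]

frame_a = {(0, 0): 1.0, (10, 10): 2.0}
frame_b = {(2, 0): 1.0, (7, 10): 2.0}
print(label_edge_pixels(frame_a, frame_b, hypotheses))  # {(0, 0): 1, (10, 10): 2}
```

In this sketch the resting background shows up as just one more hypothesis, `(0, 0)`, and the two outlier flow vectors fall below the threshold. Integration over time is not modeled here.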

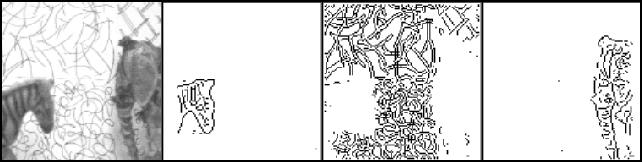

Figure 1: One frame of the moving-animal sequence and the segmentation result. The zebra moves to the right, the elephant to the left. The background is stationary and is treated as just one more object.

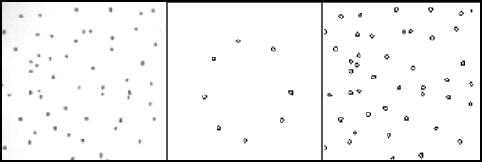

Figure 2: One frame of the dot-pattern sequence and the segmentation result. The pattern consists of a circle of eight dots and a moving background. In addition to the continuous motion, each frame is arbitrarily displaced by up to about 6 pixels relative to the previous frame. The images have 128×128 pixels.

Image sequences: Below you will find the five image sequences I used in the experiments presented in the publications. Feel free to use them for your research and scientific publications as long as you cite the source. The sequences are stored as animated .gif files. You can view them with your browser or with xanim, for instance. You can decompose a sequence into single images with the command `convert -deconstruct sequence_name.gif sequence_name%02d.gif`. With `convert -deconstruct sequence_name.gif[14] sequence_name14.gif` you can select only frame 14, for instance. The frames shown on this page are the ones used in the Pattern Recognition paper.

Here are two more sequences not used for the publications.

I agree that it would be great if I could also present the segmentation results for these sequences as animated gifs. However, I have not used the program for years now, and I am reluctant to go through the hassle of recompiling and rerunning it.

Thanks go to Arne Jacobs in Bremen for technical assistance in converting my image sequences into animated gif files.