Results of the GECCO'2018 1-OBJ Track

"Raw" Result Data

On each problem, participants were judged by the best (lowest) function value achieved within the given budget of function evaluations. There were 17 participants in the field. The best function value per problem and participant (1000 × 17 double-precision numbers) is listed in this text file.

Participant Ranking

Participants were ranked based on aggregated problem-wise ranks (details here and here). The following results table lists participants with their overall scores (higher is better) and the sum of ranks over all problems (lower is better). The table can be sorted w.r.t. these criteria.

| rank | participant | method name | method description | software | paper | score | sum of ranks |
|---|---|---|---|---|---|---|---|
| 1 | Nbelkhir | Feature Based Algorithm Selection | Feature Based Algorithm Selection + algorithm scheduling: a set of 20 optimizers including CMA-ES, BFGS, ... | | | 1077.06 | 3898 |
| 2 | Danil Shkarupin | | | | | 878.68 | 4407 |
| 3 | radka | | | | | 817.503 | 4747 |
| 4 | avaneev | biteopt2017 | | link | | 744.583 | 4730 |
| 5 | jpsbook | | | | | 730.328 | 5093 |
| 6 | Al Jimenez | | | | | 578.137 | 5951 |
| 7 | Poly Montreal | MADS + VNS + NM | | | | 509.345 | 6421 |
| 8 | Artelys | | | | | 275.369 | 9915 |
| 9 | GERAD | PSD-MADS | Serial version of PSD-MADS, based on MADS and using the NOMAD software. | link | link | 244.434 | 7665 |
| 10 | mini-mlog | GAPSO | A swarm approach utilizing CPSO, SPSO, DE, Simplex and quasi-Newton methods | link | link | 140.54 | 8320 |
| 11 | Jeremy | Research algorithm | | | | 105.573 | 8591 |
| 12 | LocalSolver | | | | | 38.6977 | 10664 |
| 13 | kadiri | | | | | 10.7097 | 12314 |
| 14 | djagodzi | | | | | 6.53201 | 13656 |
| 15 | anonymous | | | | | 3.63873 | 14834 |
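The "sum of ranks" column can be illustrated with a small sketch. It assumes the raw data is available as a matrix with one row per problem and one column per participant, that the lowest function value on a problem receives rank 1, and that tied values share the average of their ranks. The tie rule, the function names, and the toy numbers are assumptions made for illustration; they are not taken from the competition's ranking details, and the score column is not reproduced here.

```python
def problem_ranks(values):
    """Rank one problem's results: the lowest value gets rank 1.

    Tied values share the average of the ranks they span
    (an assumption; the competition's tie rule is not stated here).
    """
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        # Extend the group [start, end] over all entries tied with order[start].
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def sum_of_ranks(results):
    """results[p][j] is the best function value of participant j on problem p."""
    totals = [0.0] * len(results[0])
    for row in results:
        for j, rank in enumerate(problem_ranks(row)):
            totals[j] += rank
    return totals


# Made-up toy data: 3 problems, 3 participants (not competition results).
toy = [
    [0.1, 0.5, 0.3],
    [2.0, 1.0, 1.0],
    [0.0, 4.0, 7.0],
]
print(sum_of_ranks(toy))  # [5.0, 6.5, 6.5]
```

A lower total means the participant was ranked near the top on more problems, which matches the "lower is better" reading of the column.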

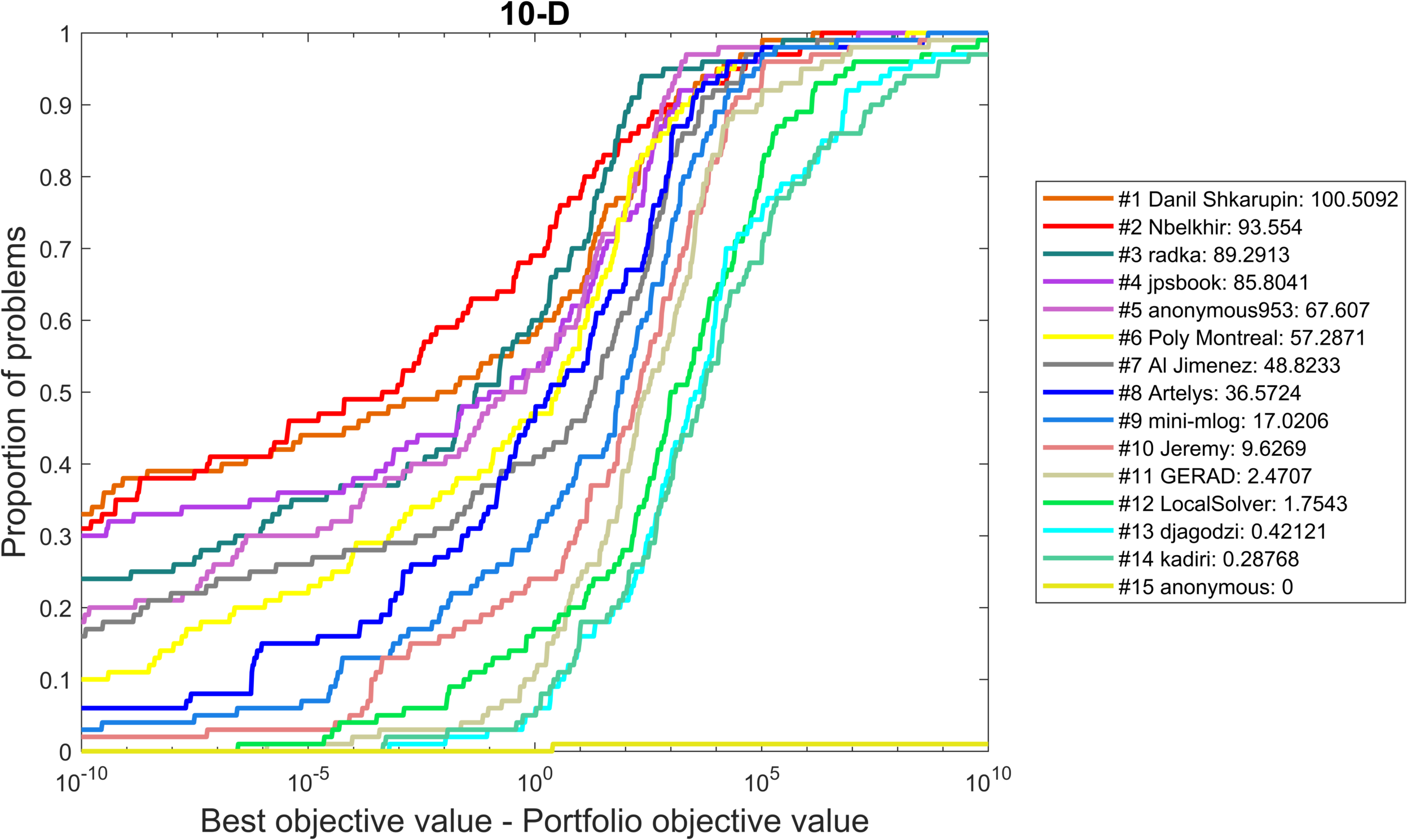

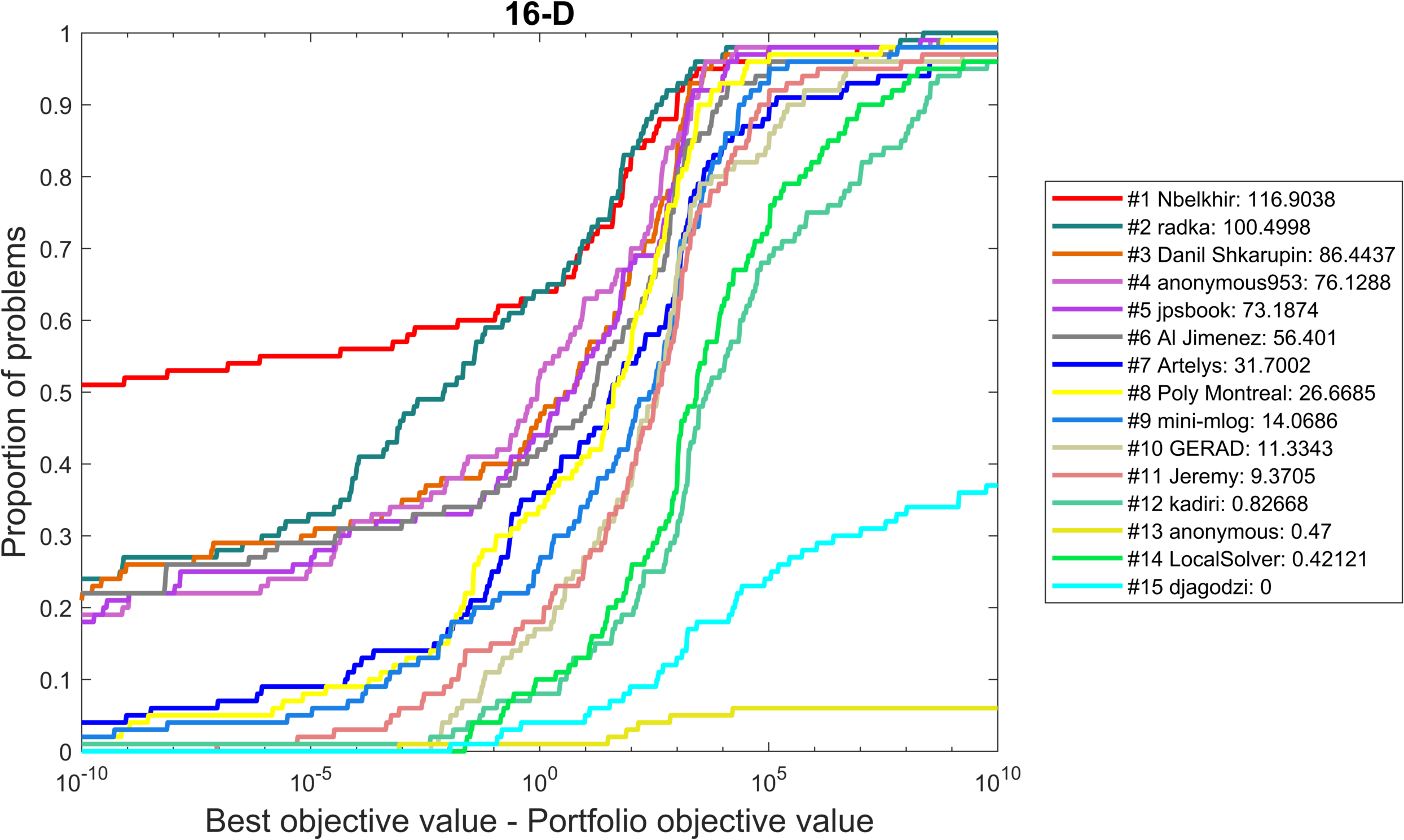

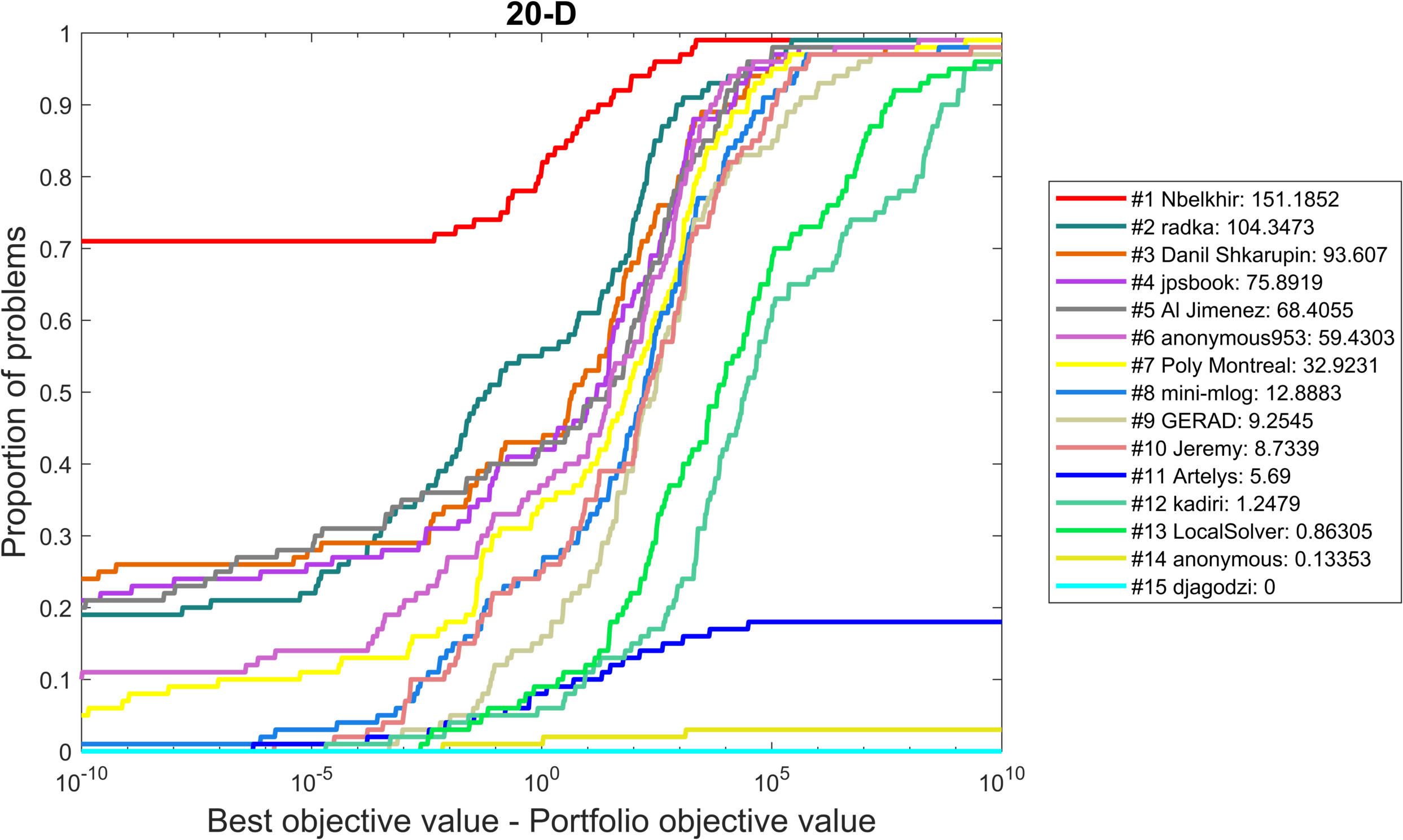

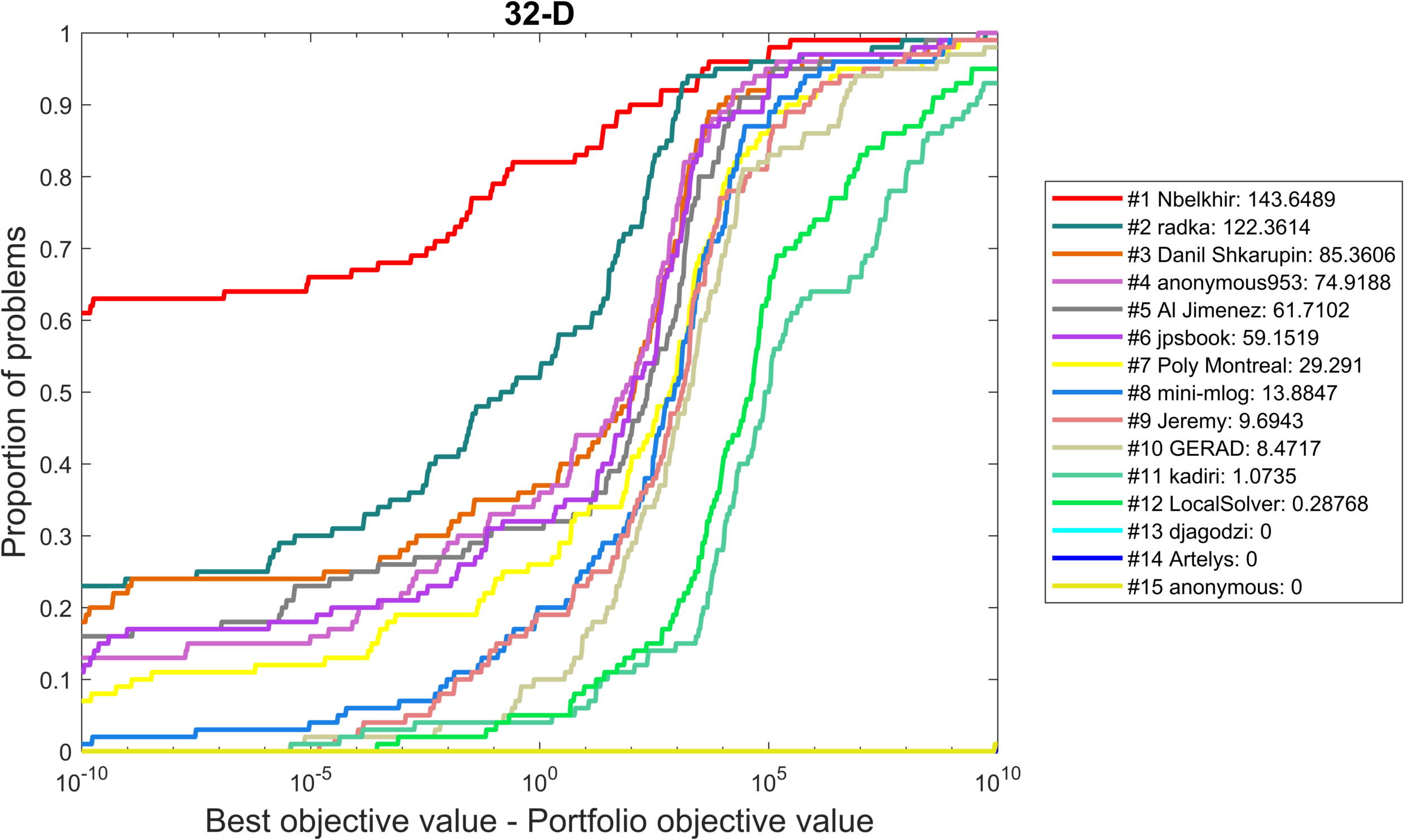

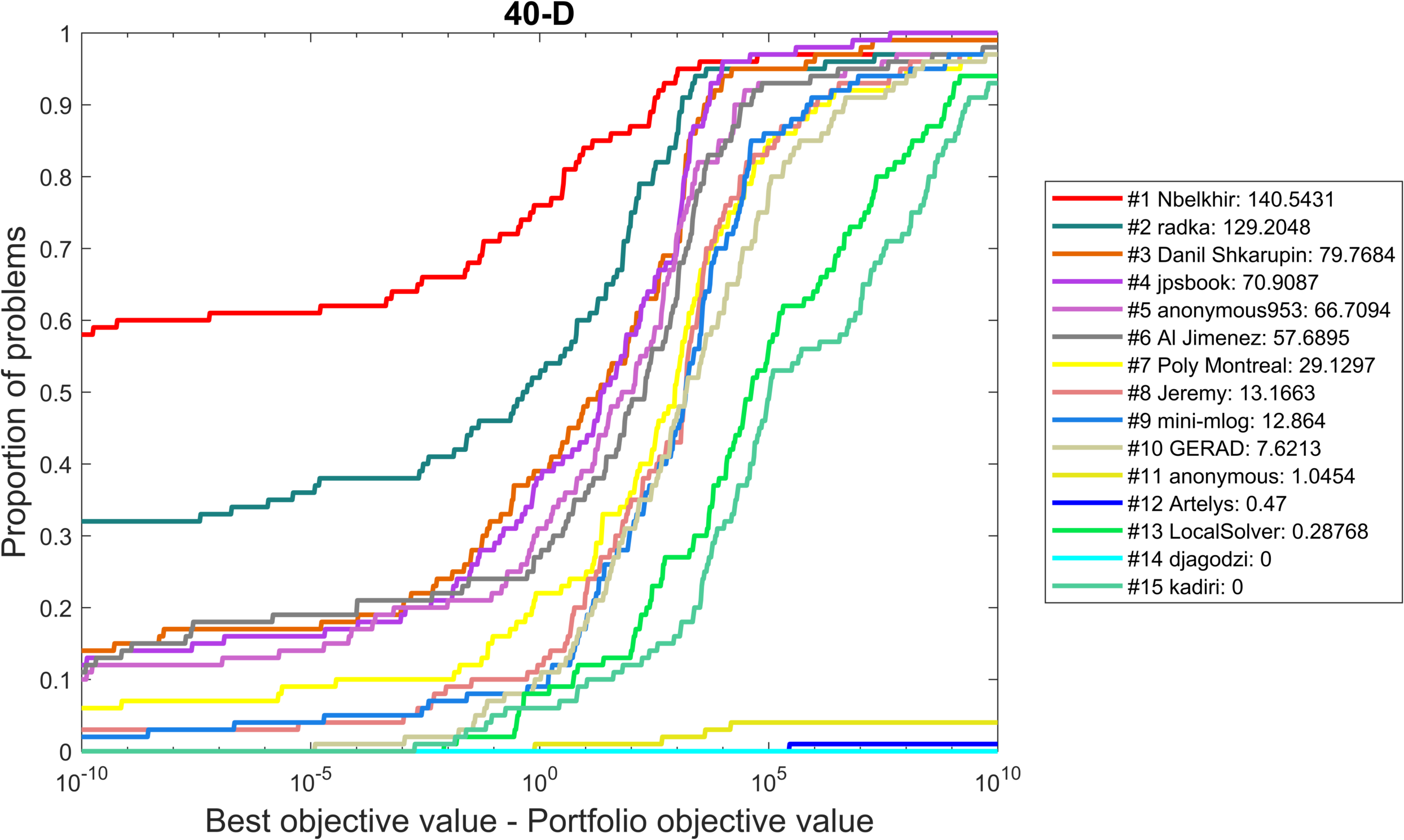

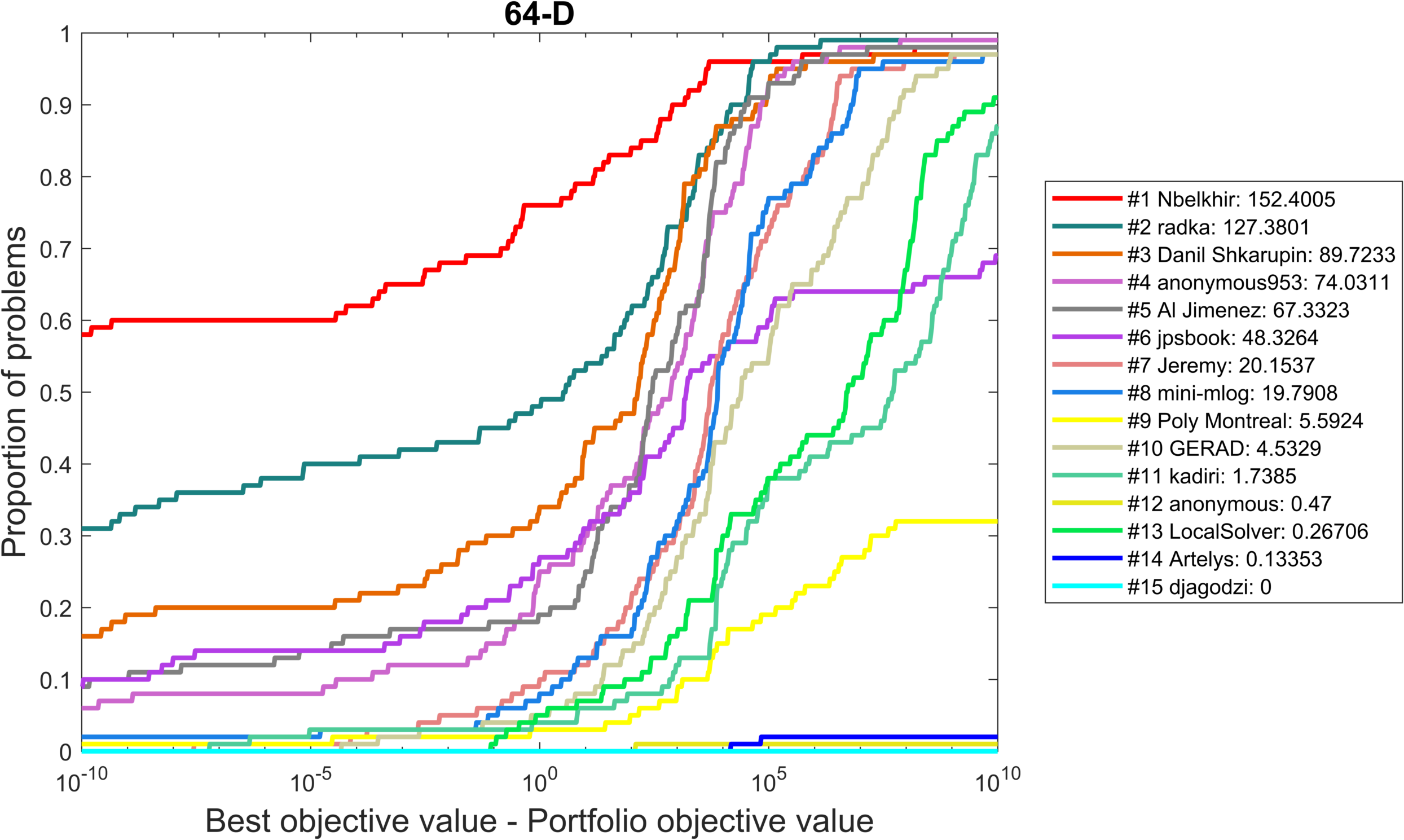

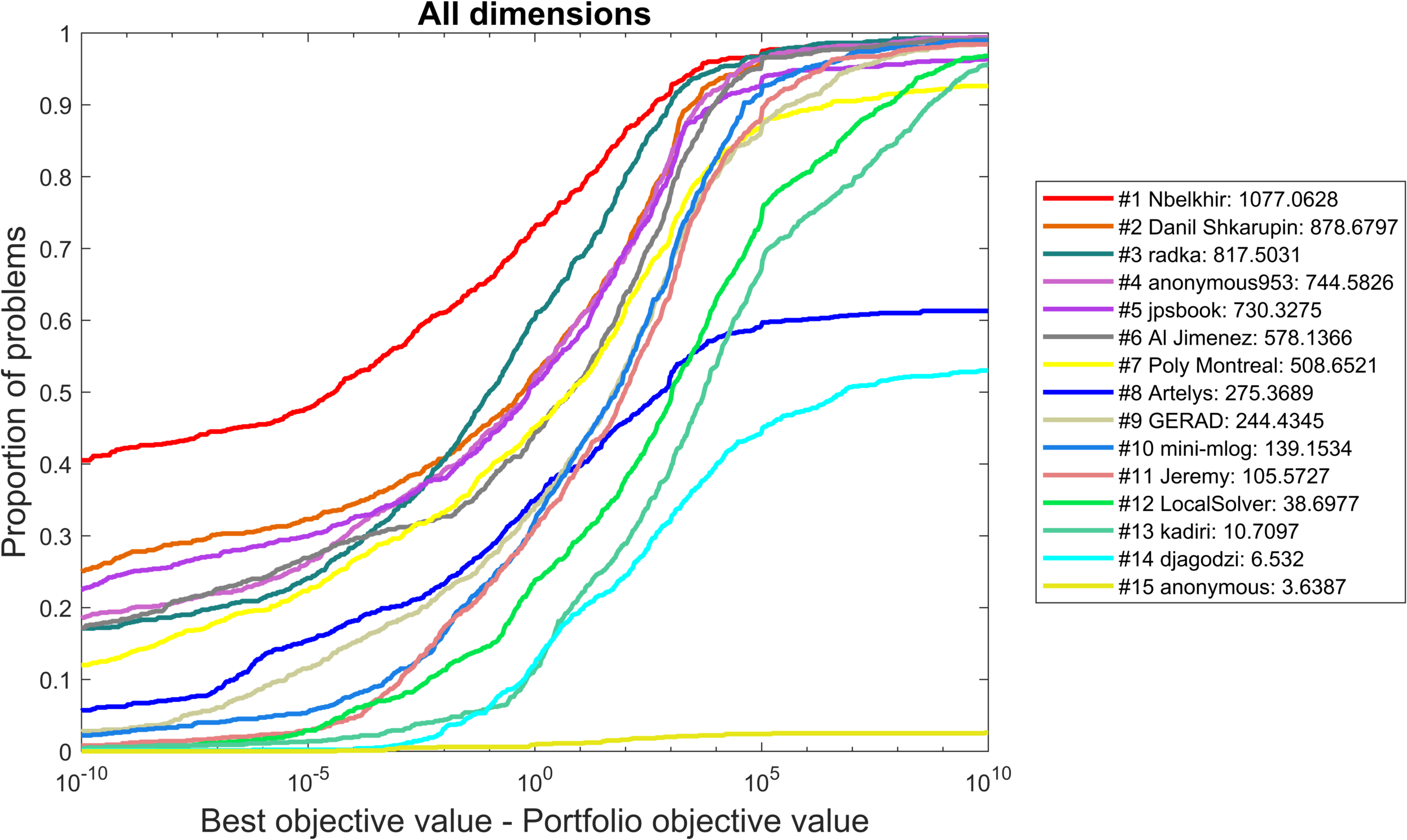

Visualization of Performance Data

The following figure shows an aggregated view of the performance data.

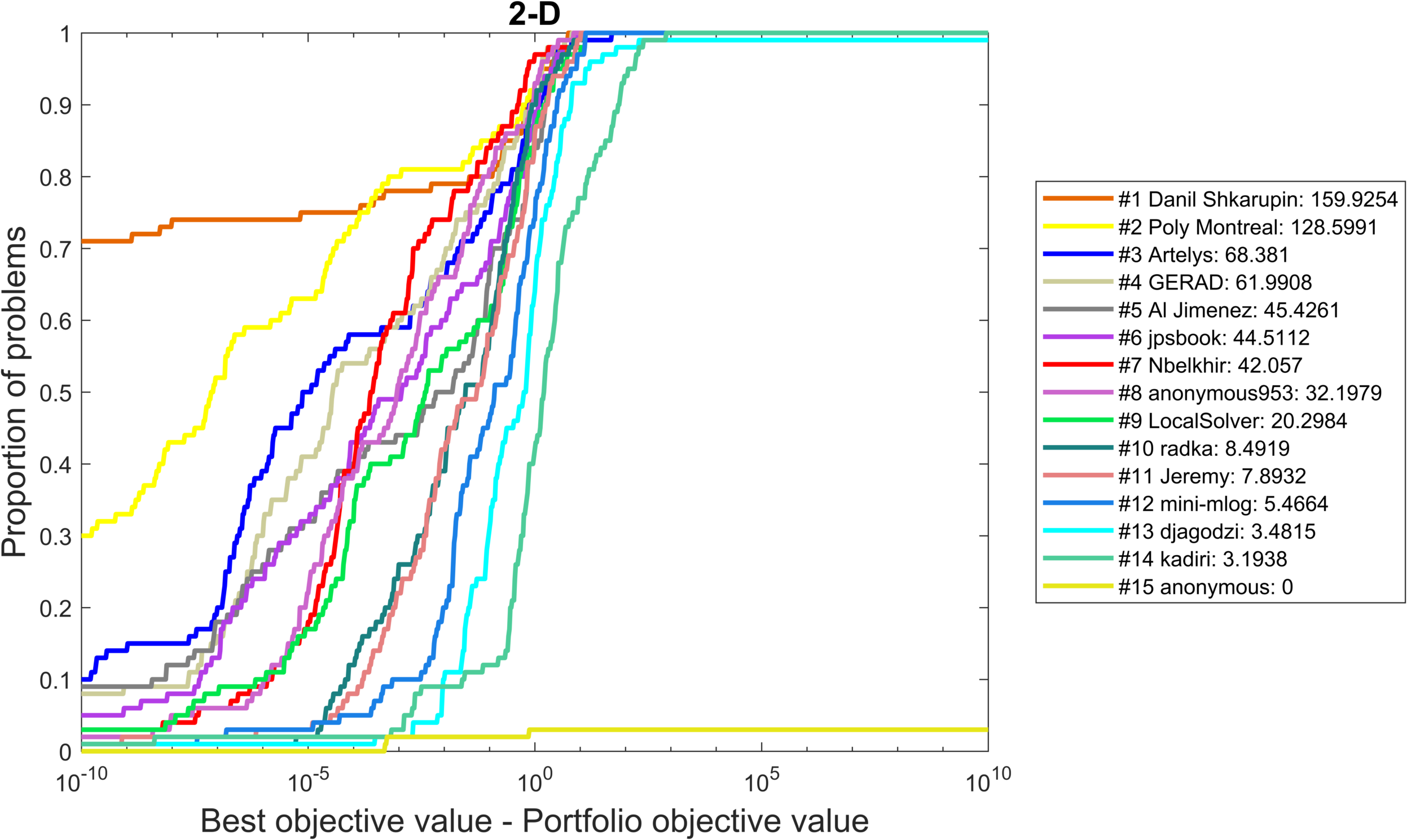

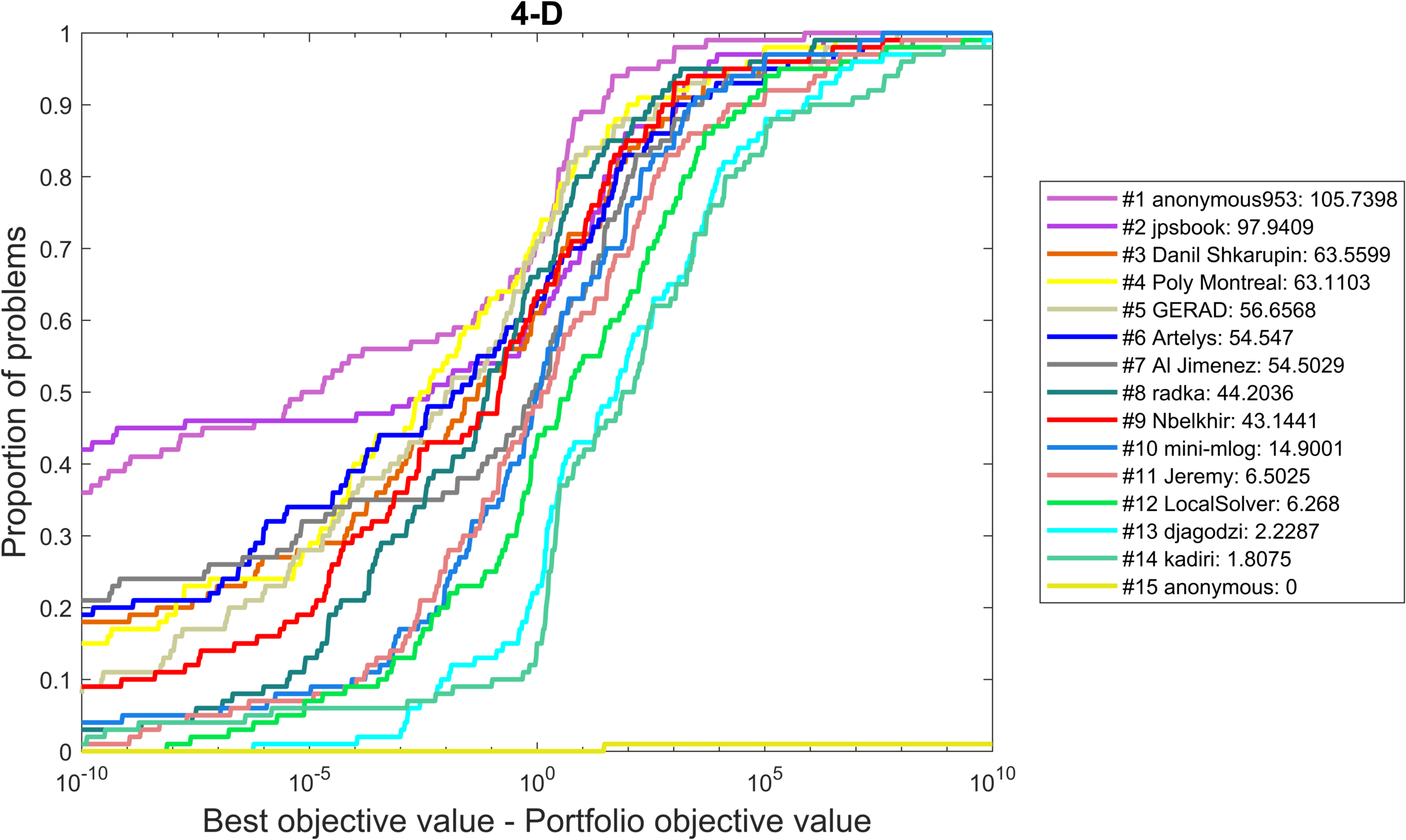

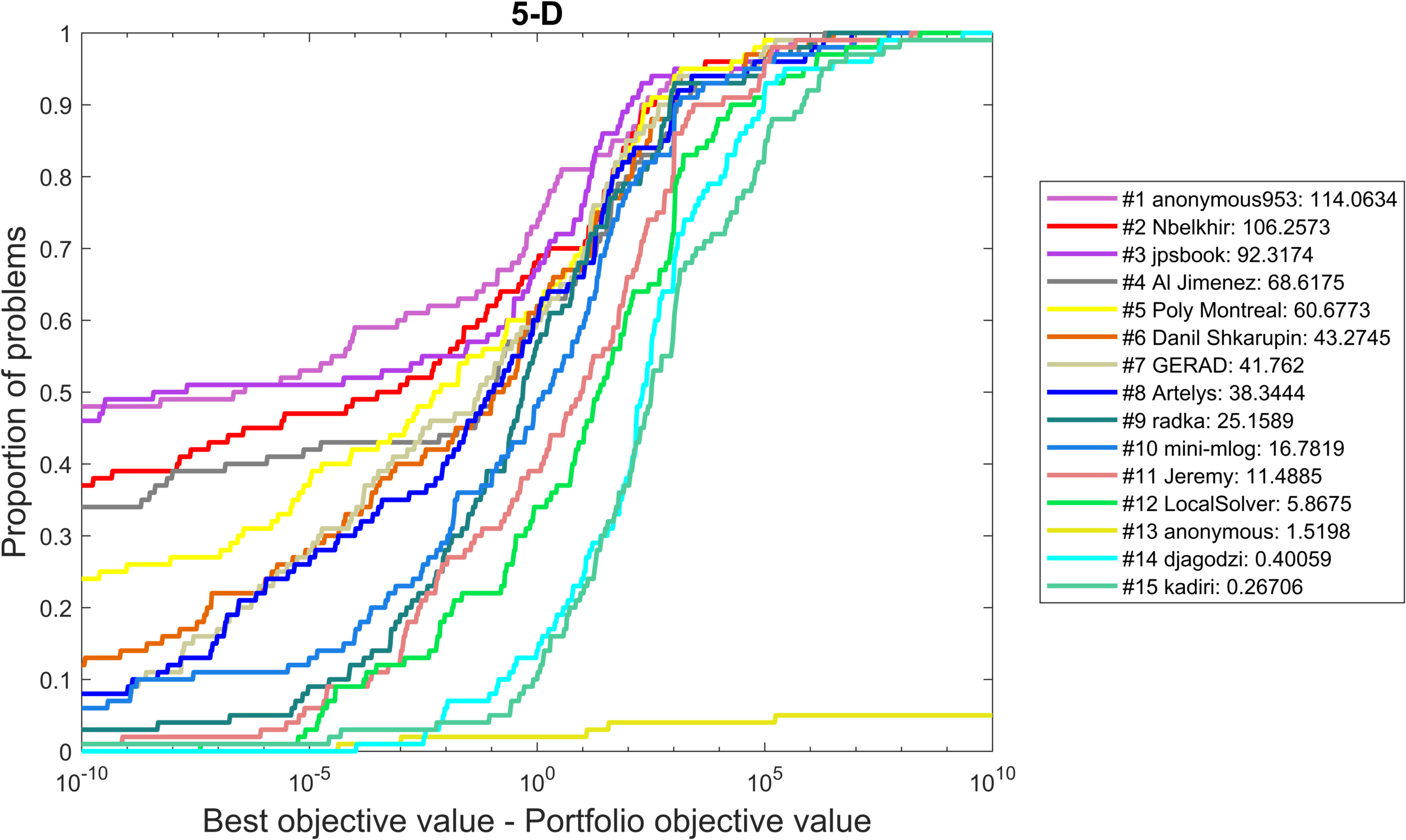

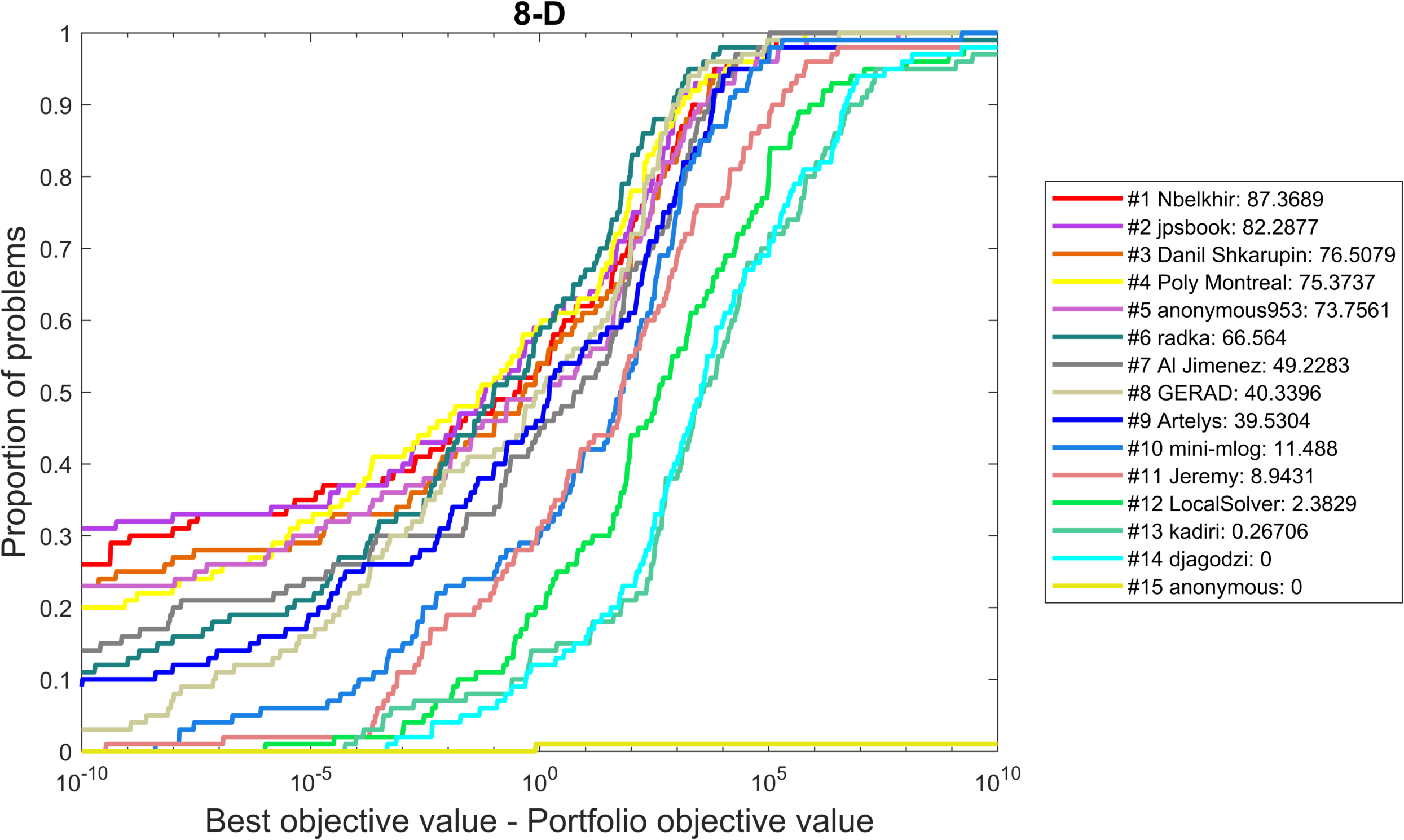

The following figures show the same data, but separately for each problem dimension.